To complete all these steps, you must be set up as the Agent of your iPhone Developer Portal. I’m also assuming that you have paid the subscription fee so that you are allowed access.

Go to http://developer.apple.com/iphone

Log in as yourself. Then click on the iPhone Developer Program Portal link.

1) Get the Development Certificate:

Create the certificate request using the Keychain Access utility on your Mac.

Submit it to the iPhone Developer Program website.

Download the generated certificate.

2) Register your device:

Go to the Devices tab in the Program Portal and register your device.

3) Create the App ID:

Click on the New App ID button.

Enter a description or name for your application.

If this is the first time you are creating an App ID, leave the Bundle Seed ID set to Generate New.

Put * for the Bundle Identifier.

You will see an ID for the App created under the ID column beside the application name of your choice. Note this alphanumeric string, as you will need it later. For this example, let’s assume the App ID is 123D4EFGHI.

4) Create the Provisioning Profile:

Click on New Profile.

Enter a profile name that you will remember.

Check all the certificates you wish included, including the certificate you created in step 1.

Select the App ID you created in step 3.

Check the devices you wish to install this application on for development purposes.

Download the provisioning profile to your Mac.

5) Install the Development Certificate:

Start Keychain Access on your Mac.

Double-click on the login keychain in the top left-hand panel, which should also be your default keychain.

Go to the File menu and select Import Items…

Select the Development Certificate downloaded in step 1, and make sure the Destination Keychain is set to login.

To verify that the certificate is correct, view it in the Certificates category for the login keychain. There should be a widget beside it; when you click on it, a private key should appear below the certificate.

6) Install the Provisioning Profile:

Start Xcode, go to the Window menu and click on Organizer.

Make sure you are on the Summary tab.

Under the Devices topic, you should see your device if it has been registered properly and is now attached to your Mac.

The summary panel is split into 2 halves: the top half is for your device and the bottom half is called Provisioning.

Click on the + icon in the bottom half and open the provisioning profile downloaded from step 4.

Next, in the iPhone Development topic, there should be a subtopic named Provisioning Profiles.

Click on Provisioning Profiles.

The panel on the right should be divided into 3 sections, with the top section having 2 columns: Name and Expiration Date.

Find the provisioning profile you downloaded in step 4 and drag it into the top section.

7) Xcode Project Settings:

Go to the Project menu in Xcode and choose Edit Project Settings.

In the settings window, select the Build tab.

Make sure the Base SDK in the Architectures section matches your device.

In Code Signing, go to the Code Signing Identity section and select Any iPhone OS Device.

The value should be exactly the same as your Developer certificate’s CN, and should be of the form: iPhone Developer: Firstname Lastname (32SDKRR55I)

Go back to the Project menu and select Edit Active Target.

Click on the Properties tab.

In the Identifier field, make sure it starts with the App ID you created in step 3: for example: 123D4EFGHI.com.apple.samplecode

8) Deploy to the iPhone device:

Click on the Build and Go button.

Friday, October 23, 2009

Sunday, June 7, 2009

Troubleshooting LINQ exceptions.

Once I was testing a deployment I made of an application that used LINQ to SQL, and I received the following exception when I ran it:

Exception thrown:
at System.Data.SqlClient.SqlConnection.OnError(SqlException exception, Boolean breakConnection)
at System.Data.SqlClient.SqlInternalConnection.OnError(SqlException exception, Boolean breakConnection)
at System.Data.SqlClient.TdsParser.ThrowExceptionAndWarning(TdsParserStateObject stateObj)
at System.Data.SqlClient.TdsParser.Run(RunBehavior runBehavior, SqlCommand cmdHandler, SqlDataReader dataStream, BulkCopySimpleResultSet bulkCopyHandler, TdsParserStateObject stateObj)
at System.Data.SqlClient.SqlDataReader.ConsumeMetaData()
at System.Data.SqlClient.SqlDataReader.get_MetaData()
at System.Data.SqlClient.SqlCommand.FinishExecuteReader(SqlDataReader ds, RunBehavior runBehavior, String resetOptionsString)
at System.Data.SqlClient.SqlCommand.RunExecuteReaderTds(CommandBehavior cmdBehavior, RunBehavior runBehavior, Boolean returnStream, Boolean async)
at System.Data.SqlClient.SqlCommand.RunExecuteReader(CommandBehavior cmdBehavior, RunBehavior runBehavior, Boolean returnStream, String method, DbAsyncResult result)
at System.Data.SqlClient.SqlCommand.RunExecuteReader(CommandBehavior cmdBehavior, RunBehavior runBehavior, Boolean returnStream, String method)
at System.Data.SqlClient.SqlCommand.ExecuteReader(CommandBehavior behavior, String method)
at System.Data.SqlClient.SqlCommand.ExecuteDbDataReader(CommandBehavior behavior)
at System.Data.Common.DbCommand.ExecuteReader()
at System.Data.Linq.SqlClient.SqlProvider.Execute(Expression query, QueryInfo queryInfo, IObjectReaderFactory factory, Object[] parentArgs, Object[] userArgs, ICompiledSubQuery[] subQueries, Object lastResult)
at System.Data.Linq.SqlClient.SqlProvider.ExecuteAll(Expression query, QueryInfo[] queryInfos, IObjectReaderFactory factory, Object[] userArguments, ICompiledSubQuery[] subQueries)
at System.Data.Linq.SqlClient.SqlProvider.System.Data.Linq.Provider.IProvider.Execute(Expression query)
at System.Data.Linq.DataQuery`1.System.Collections.Generic.IEnumerable.GetEnumerator()
at System.Collections.Generic.List`1..ctor(IEnumerable`1 collection)
at System.Linq.Enumerable.ToList[TSource](IEnumerable`1 source)
at Canwest.Broadcasting.Windows.Forms.Translator.translatePlaylistsForChannels()
at Canwest.Broadcasting.Windows.Forms.Translator.translateAllPlaylistFiles()
at Canwest.Broadcasting.Web.PlaylistAsrun.PlaylistAsrunService.RunTranslation() in H:\ProgramCode\Win32_Applications\PlaylistAsrunTranslator\PlaylistAsrunASPNETWebService\PlaylistAsrunService.asmx.cs:line 151

This exception was being thrown at the point where I was dumping a LINQ to SQL result set into a List by using the ToList() method. When digging deeper, I realized the result set being returned was based on a template in the LINQ designer (dbml) file, which expected the SQL table to have one more column than the table actually contained. I added the missing column to the table and the error went away.

The lesson is: when receiving exceptions like the above, check that the tables on the database server match what is shown in the LINQ designer view. One can just use SQL Server Management Studio to make a graphical comparison.
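If a graphical comparison is not convenient, the same check can be done on two lists of column names. The following is only a minimal sketch of that idea: the class name SchemaCheck, the method MissingColumns, and the column names are all made up for illustration, and in practice the two lists would come from the dbml mapping and from the database itself.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class SchemaCheck
{
    // Returns the columns the mapping expects but the table does not contain.
    // Names are compared case-insensitively.
    public static List<string> MissingColumns(IEnumerable<string> mappedColumns,
                                              IEnumerable<string> tableColumns)
    {
        return mappedColumns
            .Except(tableColumns, StringComparer.OrdinalIgnoreCase)
            .ToList();
    }

    public static void Main()
    {
        // Hypothetical column lists, typed in by hand for illustration only.
        string[] mapped = { "PlaylistId", "ChannelName", "AirDate", "Duration" };
        string[] actual = { "PlaylistId", "ChannelName", "AirDate" };

        foreach (string column in MissingColumns(mapped, actual))
        {
            Console.WriteLine("Missing in database: " + column);
        }
    }
}
```

Any column reported here is one that the designer expects and the server does not have, which is the situation that produced the exception above.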

Monday, May 25, 2009

Behaviour of the AutoIncrementSeed property

Oftentimes we need to reset the seed to 0 when refilling a DataTable with new data. Because of the way set_AutoIncrementSeed is implemented, we cannot just set AutoIncrementSeed = 0 on the column. Instead, we must do the following:

AutoIncrementStep = -1;

AutoIncrementSeed = 1;

AutoIncrementStep = 1;

Labels:

.NET,

AutoIncrement,

AutoIncrementSeed,

AutoIncrementStep,

DataTable

Saturday, April 11, 2009

Programmatically renaming a computer using C#

Recently I was presented with an interesting problem: to develop an application that would rename multiple computers for the end user.

The user requirements were straightforward enough:

1) Display a list of all computers in Active Directory from the SMS database.

2) Allow the user to select multiple computers and assign their new names.

3) Rename all these computers.

However, the technical requirements and subsequent architecture were much more involved. The tool required the ability to rename multiple computers at once, and had to do all the operations in-process so as to return error information to the user. After some googling, I figured the easiest way to accomplish this was to spawn multiple threads, each of which would run a separate computer renaming operation, then inform the user to reboot their machine if the renaming was successful.

The easiest way to create the multiple threads was to use a ThreadPool, and then load it up with all the renaming operations as individual work items. This part was simple enough, because there was no need to coordinate between the multiple threads. The program only had to wait until all the threads had finished running.
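A minimal sketch of that pattern, not the tool’s actual code: each machine becomes one ThreadPool work item, and the caller blocks until every item has signalled completion. The class name RenameBatch, the RunAll method, the rename delegate, and the machine names are all hypothetical. CountdownEvent is used here for brevity; it only arrived in .NET 4, so it is a substitute for whatever waiting mechanism the original tool used.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;

public static class RenameBatch
{
    // Queues one work item per machine and blocks until all of them have finished.
    // The rename delegate stands in for the real renaming operation.
    public static IDictionary<string, bool> RunAll(IList<string> machines,
                                                   Func<string, bool> rename)
    {
        var results = new ConcurrentDictionary<string, bool>();
        if (machines.Count == 0)
        {
            return results;
        }

        using (var pending = new CountdownEvent(machines.Count))
        {
            foreach (string machine in machines)
            {
                string name = machine; // local copy captured by the work item
                ThreadPool.QueueUserWorkItem(_ =>
                {
                    try { results[name] = rename(name); }
                    catch (Exception) { results[name] = false; }
                    finally { pending.Signal(); } // always count the item as done
                });
            }
            pending.Wait(); // no coordination needed, just wait for everything
        }
        return results;
    }

    public static void Main()
    {
        // Hypothetical machine names and a fake rename that fails for one of them.
        var machines = new List<string> { "PC-001", "PC-002", "PC-003" };
        IDictionary<string, bool> results = RunAll(machines, name => name != "PC-002");

        foreach (string machine in machines)
        {
            Console.WriteLine(machine + ": " + (results[machine] ? "renamed" : "failed"));
        }
    }
}
```

Collecting a per-machine result in a thread-safe dictionary is one simple way to get error information back to the user once all the work items are done.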

The challenge came in renaming the machines in-process. The easiest way to do this would be through Directory Services, but I could not determine how to get the correct information from the SMS databases to put together the proper LDAP URL with OUs. In addition, for Directory Services to work on a machine, it must be at least at the XP Service Pack 3 level, which my IT department could not guarantee for all machines in the company. So after many days of painful googling and experimentation, I came up with a 3-step approach:

1) Add a new local user to the computer to be renamed and make it part of the local Administrators group using Directory Services.

2) Rename the Active Directory object corresponding to the computer to the new name via Directory Services.

3) Unjoin the computer from the domain, then use the local user from step 1 to rename it and rejoin it to the domain, all with WMI (Windows Management Instrumentation).

Why the 3-step approach? Since WMI works with Windows operating systems below XP Service Pack 3, it was the required choice for the renaming portion. However, the WMI renaming bit works by remotely invoking the Rename method of the target computer’s local Win32_ComputerSystem object, and that method only runs if the computer is unjoined from the domain. Therefore, in order to call the Rename method after unjoining the computer from the domain, the WMI ManagementObject must connect and authenticate to the target computer as a local administrator, hence the need for step 1. To rejoin the domain after the renaming operation, the application needs a domain user account that has permission to join machines to Active Directory, and the target computer must find an Active Directory object that matches its name. Hence the need for step 2.

Enough of the high level explanation of how the tool will work; after all, I’m sure if you really are reading this blog, you are looking for source code, right? Here’s the source for step 1:

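public Boolean addUserByDirectoryServices(String machineName, String pcAdministrator, String pcAdministratorPassword)
{
    // rc stays true even when an exception is caught: failures are recorded in
    // m_error_msg and m_stacktrace rather than reported through the return value.
    Boolean rc = true;
    try
    {
        // Bind to the target machine through the WinNT provider with the supplied credentials.
        String connString = "WinNT://" + machineName;
        using (DirectoryEntry de = new DirectoryEntry(connString, pcAdministrator, pcAdministratorPassword))
        {
            // Existence check left disabled; see the explanation below the listing.
            //if (de.Children.Find(m_PcAdministrator) != null)
            //{
            //    de.Close();
            //    de.Dispose();
            //    return true;
            //}

            // Create the new local user from the class fields and set its password.
            DirectoryEntry user = de.Children.Add(m_PcAdministrator, "user");
            user.Invoke("SetPassword", new Object[] { m_PcAdministratorPassword });
            user.CommitChanges();
            de.RefreshCache();

            // Add the new user to the local Administrators group.
            DirectoryEntry adminGroup = de.Children.Find("Administrators", "group");
            if (null != adminGroup)
            {
                adminGroup.Invoke("Add", new Object[] { user.Path.ToString() });
            }

            // Explicit cleanup in addition to the using block.
            de.Close();
            de.Dispose();
        }
        rc = true;
    }
    catch (Exception e)
    {
        // Record the failure for display to the user.
        String msg = e.Message;
        m_error_msg += "Adding local user error: " + msg;
        String stacktrace = e.StackTrace;
        m_stacktrace += "\nAdding local user dump: " + stacktrace;
    }
    return rc;
}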

In the beginning, you will notice I use the URL WinNT:// as opposed to LDAP:// to locate the machine via Directory Services. This is because I could not compute the proper LDAP query string. You will also notice I commented out a check to determine if I already added the user, and just catch the exception. I did this because I found that the check to see if the user already existed always threw an exception, whether the user existed or not. Therefore, in using this method, just catch exceptions that are thrown and ignore them, or display them to the user. I also encapsulate the DirectoryEntry object representing the target machine in a using block, and call its Dispose() method at the end, because before that I was constantly getting errors stating I had multiple connections open on the target machine, which were not supported. Those errors can also be ignored if you get them; they bear no significance as to whether the new local user was created or not.

For step 2, here is the code to rename the object in Active Directory, also using the Directory Services methods and API:

public Boolean renameMachineByDirectoryServices(String oldname, String newname, String administrator, String administratorPassword)
{
    Boolean rc = true;
    try
    {
        // Bind to the computer object under its current name.
        DirectoryEntry machineNode = null;
        machineNode = new DirectoryEntry("WinNT://" + oldname);
        machineNode.Username = administrator;
        machineNode.Password = administratorPassword;
        machineNode.AuthenticationType = AuthenticationTypes.Secure;

        // Rename the object and commit the change.
        machineNode.Rename("CN=" + newname);
        machineNode.CommitChanges();
    }
    catch (Exception e)
    {
        // Captured only for inspection in a debugger; deliberately not propagated.
        String msg = e.Message;
        String stacktrace = e.StackTrace;
    }
    return rc;
}

This was a simple and straightforward method to code. All it does is rename the object in Active Directory. Also, any exceptions thrown can be ignored; they have no bearing on whether the operation was successful or not. If you don’t believe me, try it.

Finally, the code for step 3 was much more complicated:

public Boolean renameRemotePC(String oldName, String newName, String domain)
{
    Boolean rc = true;
    try
    {
        // Describe the Win32_ComputerSystem object on the target machine.
        ManagementPath remoteControlObject = new ManagementPath();
        remoteControlObject.ClassName = "Win32_ComputerSystem";
        remoteControlObject.Server = oldName;
        remoteControlObject.Path = oldName + "\\root\\cimv2:Win32_ComputerSystem.Name='" + oldName + "'";
        remoteControlObject.NamespacePath = "\\\\" + oldName + "\\root\\cimv2";

        // Connect as the local administrator created in step 1.
        ConnectionOptions conn = new ConnectionOptions();
        conn.Authentication = AuthenticationLevel.PacketPrivacy;
        conn.Username = oldName + "\\" + m_PcAdministrator;
        conn.Password = m_PcAdministratorPassword;

        ManagementScope remoteScope = new ManagementScope(remoteControlObject, conn);
        ManagementObject remoteSystem = new ManagementObject(remoteScope, remoteControlObject, null);
        ManagementBaseObject outParams;

        // 1. Unjoin the computer from the domain using the domain administrator account.
        ManagementBaseObject unjoinFromDomain = remoteSystem.GetMethodParameters("UnjoinDomainOrWorkgroup");
        unjoinFromDomain.SetPropertyValue("Password", m_domain_admin_password);
        unjoinFromDomain.SetPropertyValue("UserName", m_domain_admin);
        outParams = remoteSystem.InvokeMethod("UnjoinDomainOrWorkgroup", unjoinFromDomain, null);

        // 2. Rename the computer using the local administrator account.
        ManagementBaseObject newRemoteSystemName = remoteSystem.GetMethodParameters("Rename");
        InvokeMethodOptions methodOptions = new InvokeMethodOptions();
        newRemoteSystemName.SetPropertyValue("Name", newName);
        newRemoteSystemName.SetPropertyValue("UserName", m_PcAdministrator);
        newRemoteSystemName.SetPropertyValue("Password", m_PcAdministratorPassword);
        methodOptions.Timeout = new TimeSpan(0, 10, 0);
        // Pass methodOptions so the 10-minute timeout set above actually applies.
        outParams = remoteSystem.InvokeMethod("Rename", newRemoteSystemName, methodOptions);

        // 3. Rejoin the domain under the new name.
        ManagementBaseObject joinFromDomain = remoteSystem.GetMethodParameters("JoinDomainOrWorkgroup");
        joinFromDomain.SetPropertyValue("Name", domain);
        joinFromDomain.SetPropertyValue("Password", m_domain_admin_password);
        joinFromDomain.SetPropertyValue("UserName", m_domain_admin);
        joinFromDomain.SetPropertyValue("FJoinOptions", 1);
        outParams = remoteSystem.InvokeMethod("JoinDomainOrWorkgroup", joinFromDomain, null);
    }
    catch (ManagementException MgEx)
    {
        // WMI-specific failures are recorded for display to the user.
        String mgs = MgEx.Message;
        String coredump = MgEx.StackTrace;
        m_error_msg += "\nRenaming PC Error: " + mgs;
        m_stacktrace += "\nRenaming PC dump: " + coredump;
    }
    catch (Exception e)
    {
        // Any other failure is recorded the same way.
        String mgs = e.Message;
        String coredump = e.StackTrace;
        m_error_msg += "\nRenaming PC Error: " + mgs;
        m_stacktrace += "\nRenaming PC dump: " + coredump;
    }
    return rc;
}

The first piece of this method was creating a ManagementObject that could connect to the target computer and instantiate an object of class Win32_ComputerSystem on that machine. The first thing I learnt was that in order to do so, the ConnectionOptions had to be set to use an authentication level of PacketPrivacy. This was the only way I was allowed to connect to another machine using WMI. After that, it was just a matter of instantiating ManagementBaseObjects for the required methods: UnjoinDomainOrWorkgroup, Rename, and JoinDomainOrWorkgroup. The required parameters and recommended values you can find in the code, so I won’t go into too much detail here, except that once again, exceptions can be safely ignored. Don’t ask me why, but they can.

All in all, I felt this application was a great way to learn about different aspects of IT administration and how to automate them. It was my first exposure to WMI and Directory Services development, and given the increasing focus on security nowadays, probably not my last. As a wrap up, I felt this assignment was also a good application of software engineering principles, as I had to gather the user requirements and technical requirements on my own, then architect and build the solution, test it, and deploy it.

The class that contains the methods above, which I called WMIWrapper, can be found in my CodePlex project http://www.codeplex.com/tv. Of course these methods can be used in any IT environment, but so far I have only been asked to do this by a television broadcaster.

Saturday, March 21, 2009

Binding the AJAX FilteredTextboxExtender control to a Textbox control located within a ReorderList control.

I recently ran into a challenge where I had to filter out non-numeric input from a TextBox control that was contained in a ReorderList’s ItemTemplate section. We wanted to filter out the keystrokes as the user was typing them instead of waiting for a postback to check the data in the field.

At first I tried dragging the FilteredTextBoxExtender onto the page and setting its TargetControlID to the ID of the text boxes, but when new items were added to the ReorderList, those IDs would change. Thus my FilteredTextBoxExtender could never find the TextBox controls it was supposed to bind to.

To resolve this, I inserted the FilteredTextBoxExtenders dynamically so their TargetControlIDs would always match the correct TextBox controls. First, I created an event handler for the ReorderList’s DataBound event, as it was that event that always generated new IDs for the TextBox controls. Then, in the event handler, I searched the ReorderList’s Items member for the TextBox controls based on the IDs assigned to them in the markup, using the FindControl() method. Afterwards, I would instantiate the FilteredTextBoxExtenders and set their TargetControlID properties to the UniqueID properties of the TextBox controls. The code would look like this:

TextBox multiplier = (TextBox)IngredientDataList.Items[countOfItems - 1].FindControl("New_IngredientMultiplier");
if (null != multiplier)
{
    // Bind to the ID that ASP.NET generated for this TextBox.
    String idToValidate = multiplier.UniqueID;
    validateMultiplier = new FilteredTextBoxExtender();
    validateMultiplier.TargetControlID = idToValidate;
    // Allow digits plus the custom characters listed in ValidChars.
    validateMultiplier.FilterType = FilterTypes.Custom | FilterTypes.Numbers;
    validateMultiplier.ValidChars = ".";
    IngredientDataList.Controls.Add(validateMultiplier);
}

In this example, the bitwise OR operator combines the two FilterTypes values because the FilterType property is a bit flag. I had to allow for decimal numbers, so I let the users enter only digits and a decimal point. As another aside, notice how in my if statement I put the null before the variable I’m checking? This is a defensive programming habit I learnt while at an interview at Microsoft. If I accidentally forget the exclamation mark, the statement becomes an assignment to null, the compilation fails, and I catch the error immediately. However, if the variable was in front and I forgot the exclamation mark, the line would read:

if (multiplier = null)

In C or C++ this compiles as an assignment rather than a comparison, so the condition silently takes the value that was just assigned (null, which is treated as false) no matter what multiplier held before. The C# compiler is stricter and rejects this particular line because a TextBox cannot be converted to bool, but the habit still guards against the same slip when comparing bool values.

What’s important is that I set the TargetControlID property to the UniqueID property of the TextBox controls, as opposed to just the ID property. It must be UniqueID because this property is generated by ASP.NET, so the FilteredTextBoxExtender stays bound to the proper control after a ReorderList.DataBind() call. The ID property is assigned by the developer and may no longer identify the control once a new item is inserted into the ReorderList.
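To make the bit-flag combination above more concrete, here is a minimal, self-contained sketch. The enum DemoFilterTypes and the helper IsAllowed are hypothetical stand-ins written for illustration; they are not the toolkit's FilterTypes enum or its filtering logic. The sketch only shows how two flags combined with the bitwise OR operator can be tested individually.

```csharp
using System;

// Hypothetical stand-in for a flags enum; not the AJAX Control Toolkit's type.
[Flags]
public enum DemoFilterTypes
{
    Custom = 1,
    Numbers = 2,
    UppercaseLetters = 4,
    LowercaseLetters = 8
}

public static class FlagsDemo
{
    // Returns true when the character passes any of the enabled filters.
    public static bool IsAllowed(char c, DemoFilterTypes filter, string validChars)
    {
        // Each flag occupies its own bit, so a bitwise AND isolates it.
        if ((filter & DemoFilterTypes.Numbers) != 0 && char.IsDigit(c))
        {
            return true;
        }
        if ((filter & DemoFilterTypes.Custom) != 0 && validChars.IndexOf(c) >= 0)
        {
            return true;
        }
        return false;
    }
}
```

Calling FlagsDemo.IsAllowed('7', DemoFilterTypes.Custom | DemoFilterTypes.Numbers, ".") accepts the digit, the same call with '.' accepts the decimal point, and a letter such as 'a' is rejected.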

Labels: AJAX, ASP.NET, control, DataBind, DataBound, event, FilteredTextBoxExtender, ReorderList, TextBox, web

Saturday, February 28, 2009

Handling multiple selects in a grid control that supports grouping and sorting

Currently in an application I am coding, I have a grid control that displays rows from a database table and allows me to group by column values in the table. For example, if the grid is displaying columns C1, C2 and C3, I can group the rows by the values of C1 by dragging that column name to the top panel of the grid control. So if C1 was the column for city, I can group all the Toronto records together.

This application must also support multiple selects. For example, when I click on two or three rows of the grid, I must be able to extract these rows in another class for processing. The grid control I am using, from DevExpress, has a method int[] GetSelectedRows(), which returns the row indices of all the rows in the grid selected by the user. So if I fill the grid with a DataSet based on a database table and select multiple rows, I can get the row indices and use those to extract the desired rows from the DataSet and load them into another DataTable. Try this out on a regular DevExpress GridControl without the grouping and notice it returns the correct rows. The C# code is sort of like this:

// Clone() copies the table's schema but none of its rows.
DataTable dt2 = gridCtrlDataSet.m_dataTable.Clone();
int[] selectedRows = gridCtrl.GetSelectedRows();
foreach (int index in selectedRows)
{
    dt2.ImportRow(gridCtrlDataSet.m_dataTable.Rows[index]);
}

Note that I clone the DataSet’s DataTable to create the target DataTable. Clone() copies the schema without the rows, and the target needs that matching schema if you wish to use the DataTable.ImportRow() method.

In the above example, I specifically stated to not use any column groupings. I did this to illustrate a point. Try grouping the rows by one of the column values by dragging the column name onto the top panel of the GridControl. Select a few rows on the GridControl and use GetSelectedRows() to get their indices, and use these indices to get the desired rows from the DataSet bound to the Database table. If you look at the rows, you will notice they are not the same ones as you selected in the grouped GridControl. That’s because when the GridControl was grouped, the indices of the rows in the GridControl’s GridView changed, so they no longer corresponded with the indices in the bound DataSet.

How do we get the selected rows from the DataSet?

Before binding the DataSet to the database table, create an extra column to hold the row indices, and make it an identity column that auto-increments. Then, after calling the GetSelectedRows() method to get the indices of the selected GridControl rows, read the index column of each selected row and use those values to get the correct rows from the DataSet. Upon doing this, you will find that you get the rows you really selected in the GridControl. The C# code is sort of like this:

DataTable dt2 = gridCtrlDataSet.m_dataTable.Clone();
int[] selectedRows = gridCtrl.GetSelectedRows();
foreach (int i in selectedRows)
{
    // Read the stored index from the selected grid row, not the grid position.
    int index = (int)gridCtrView.DataRowView[i].Row["ID"];
    dt2.ImportRow(gridCtrlDataSet.m_dataTable.Rows[index]);
}

What is the moral of the story?

Be sure to understand the behaviour of your GridControls before assuming what their methods for returning selected rows actually return.
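The index-column technique described above can be tried without any grid control. The following is a minimal sketch that assumes a plain System.Data.DataTable and simulates the grouped grid with a sorted array of rows; the class GroupedSelectionDemo, its method names, and the sample cities are all hypothetical and are not part of the DevExpress API.

```csharp
using System;
using System.Data;
using System.Linq;

// Hypothetical demo: a sorted row array stands in for the grouped grid view.
public static class GroupedSelectionDemo
{
    // Builds a table whose auto-incrementing ID column mirrors each row's position.
    public static DataTable BuildTable()
    {
        var table = new DataTable("Stations");
        DataColumn id = table.Columns.Add("ID", typeof(int));
        id.AutoIncrement = true;
        id.AutoIncrementSeed = 0;
        id.AutoIncrementStep = 1;
        table.Columns.Add("City", typeof(string));

        foreach (string city in new[] { "Toronto", "Ottawa", "Toronto", "Montreal" })
        {
            DataRow row = table.NewRow();
            row["City"] = city;
            table.Rows.Add(row);
        }
        return table;
    }

    // Simulates grouping: the view order no longer matches the table order.
    public static DataRow[] SortedView(DataTable source)
    {
        return source.Rows.Cast<DataRow>()
                     .OrderBy(r => (string)r["City"])
                     .ToArray();
    }

    // Maps each selected view position back to the source row through the ID column.
    public static DataTable CopySelected(DataTable source, DataRow[] viewRows, int[] selectedViewIndices)
    {
        DataTable copy = source.Clone();
        foreach (int i in selectedViewIndices)
        {
            int index = (int)viewRows[i]["ID"];
            copy.ImportRow(source.Rows[index]);
        }
        return copy;
    }
}
```

With this sample data, selecting view positions 0 and 1 yields the Montreal and Ottawa rows, whereas indexing the source table directly with 0 and 1 would have returned Toronto and Ottawa.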

Friday, January 23, 2009

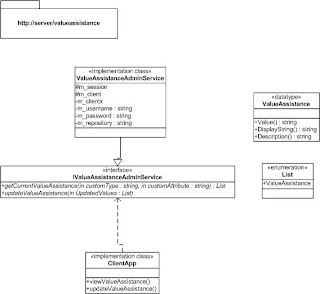

Value Assistance Management without DAB Pattern

Giving non-technical end users the ability to modify the value assistance list of a custom attribute without giving them access to Application Builder.

Problem:

Due to the complex workflows and nature of the broadcast business, Documentum needs to be customized for television broadcast engineering in order to use it as a media management system. Part of the customization includes representing different episodes as objects in the repository, and storing the cuesheet information of each episode as attributes. One of the required attributes is closed captioning information, which can only be one of a pre-defined list of values. These values may require modification from time to time by the broadcast engineering staff. This issue can be resolved using a vanilla Documentum install by adding the predefined list of values as a value assistance list to a custom type’s attribute, and allowing the end users access to Documentum Application Builder (DAB) to update the value assistance list. However, DAB encompasses a lot of functionality that is not desirable in a typical end user’s hands. The only other vanilla approach would be to provide the end users with Documentum Query Language (DQL) queries they can enter via Documentum Administrator (DA). This approach is also risky, as it requires system administrators to expose DA to non-technical staff and requires training the end users in DQL. That leaves customization as the only practical approach. So how should this customization be implemented?

Solution:

There are several options that can be taken, but most can be summarized into the 2 below:

1) Providing Lookups for Data Fields (this is a pattern already in development at the EMC developer community)

2) Provide a service that encapsulates either the Documentum Foundation Services (DFS) or Documentum Foundation Classes (DFC). The service must also provide a way for clients to execute Documentum Query Language (DQL) required to update the value assistance data for a custom attribute. Then provide a client to consume the service, which can be anything from a thick client to a mobile client.

Obviously, since Providing Lookups for Data Fields is already a pattern in progress, I will focus on the second option.

Manage Value Assistance Pattern

Description:

The primary business users for this pattern would be operations staff in the television broadcasting industry, though other industry verticals may find use for this pattern as well.

As a prerequisite, it is assumed that the current IT environment contains a Documentum repository and a box running either DFS or DFC. Since there is already DFS/DFC in the environment, why does this pattern include a service to encapsulate this functionality? This is to account for the relatively high costs of a Documentum implementation; there are licensing costs associated with every server hosting DFS/DFC, so a single service in front of one licensed server is cheaper than installing DFS/DFC wherever the functionality is needed.

Using a design based on services oriented architecture, this pattern will expose the functionality to update the value assistance data for any custom type in a Documentum repository to multiple non-technical users. This will avoid licensing and training costs associated with deploying DAB to multiple desktops and training non-IT staff on how to use DAB.

Functional How-To:

The Manage Value Assistance pattern is based on the layered services pattern. First, create a method that accepts the name of a custom type and a custom attribute as parameters, and returns a collection of objects with members for the value, display string and description of each value assistance item. Then, create a method that accepts the name of a custom type, the name of a custom attribute of the custom type, and a collection of value assistance items as described above. This method basically overwrites the existing value assistance data for the custom attribute of the custom type and replaces them with the values contained in the collection passed through as a parameter. Essentially the collection of value assistance data is used to build a string object that will be the DQL query required to run against the repository. Once the DQL query is constructed, the method will run the query against the repository with the PUBLISH keyword to ensure other users have access to the new value assistance data.

Since this second method will be exposed to multiple users, it must be made thread-safe so that two users cannot overwrite the value assistance of the same custom type and attribute at the same time. As a security precaution, authorization checking should be implemented here so that only users who are supposed to modify the value assistance are allowed to. Therefore, this service must never allow anonymous access.

Next, expose these methods via a service consumable by clients hosted on other machines. The favourite choice would be an XML web service, but WCF is also a good option. This will give many users access to this functionality, so it can be shared across the enterprise.
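The two methods and the concurrency and authorization requirements described above can be sketched as follows. This is only an illustration under stated assumptions: the class names, the in-memory dictionary that stands in for the repository, and the simple user whitelist are all hypothetical, and the sketch does not call DFC, DFS or DQL. A real implementation would build and run the DQL statement where the comment indicates.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;

// Hypothetical shape of one value assistance entry.
public sealed class ValueAssistanceItem
{
    public string Value { get; set; }
    public string DisplayString { get; set; }
    public string Description { get; set; }
}

// Hypothetical service; an in-memory store stands in for the repository.
public sealed class ValueAssistanceService
{
    private readonly ConcurrentDictionary<string, object> _locks =
        new ConcurrentDictionary<string, object>();
    private readonly ConcurrentDictionary<string, List<ValueAssistanceItem>> _store =
        new ConcurrentDictionary<string, List<ValueAssistanceItem>>();
    private readonly HashSet<string> _authorizedUsers;

    public ValueAssistanceService(IEnumerable<string> authorizedUsers)
    {
        _authorizedUsers = new HashSet<string>(authorizedUsers);
    }

    private static string Key(string typeName, string attributeName)
    {
        return typeName + "." + attributeName;
    }

    // First method: return the current list for a type/attribute pair.
    public IList<ValueAssistanceItem> GetValues(string typeName, string attributeName)
    {
        List<ValueAssistanceItem> items;
        return _store.TryGetValue(Key(typeName, attributeName), out items)
            ? items.ToList()
            : new List<ValueAssistanceItem>();
    }

    // Second method: overwrite the list, one writer at a time per type/attribute.
    public void ReplaceValues(string user, string typeName, string attributeName,
                              IEnumerable<ValueAssistanceItem> items)
    {
        if (!_authorizedUsers.Contains(user))
        {
            throw new UnauthorizedAccessException("User may not edit value assistance.");
        }

        string key = Key(typeName, attributeName);
        lock (_locks.GetOrAdd(key, _ => new object()))
        {
            // A real implementation would build and execute the DQL update here.
            _store[key] = items.ToList();
        }
    }
}
```

Locking per type/attribute pair, rather than with one global lock, lets users edit different attributes concurrently while still serializing updates to the same one.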

When to use it:

Obviously there are multiple ways to allow non-technical users to gain access to the value assistance data. The database table these values reside in can be updated directly with a stored procedure, saving the trouble of learning the Documentum APIs. Although this is the more efficient way, I do not recommend it as it bypasses a lot of the built in plumbing that Documentum provides. It’s always safer to use the Documentum provided facilities to manipulate it as they naturally invoke required background processes to ensure everything goes smoothly.

Examples:

I implemented an example on http://www.codeplex.com/documentum.

The requirements to make the example work are:

Content Server 5.3 SP6.

DFC 5.3 SP6.

Microsoft .NET Framework 3.5 (WCF and WPF).

Labels: Application Builder, C#, DAB, DFC, Documentum, WCF, WPF

Friday, January 16, 2009

Improving Performance of LINQ Queries

Part of the challenge facing television broadcasters is reconciling asrun logs against the playlists they are supposed to represent. Every once in a while content is changed on the fly, so what is actually broadcast differs from what the original playlist stated. Part of this reconciliation involves the generation of recon keys, which requires comparing the event information of an asrun log against the playlist it was generated for. In order to compare the original playlists and the resulting asrun logs, I turned to LINQ to get the information.

One of the LINQ queries I used for generating the recon keys was:

var rOriginalEvent = from orgEvents in db.BIP_Playlist_Events
                     where orgEvents.eventid.Equals(searchStr)
                     orderby orgEvents.Last_Updated descending
                     select orgEvents;
if (rOriginalEvent.Count() > 0)
{
    hasMatchingEvents = true;
    dlstartDateTime = rOriginalEvent.First().dlstart;
    orgDlhnumber = rOriginalEvent.First().dlhnumber;
    orgEventid = rOriginalEvent.First().eventid;
}

The purpose of this query was to search the archives of the original playlists from which the D-class asrun log was generated, so that the original event id, start time and dlhnumber could be compared against the asrun log’s information. These playlists were archived in a SQL Server 2005 database table, which this LINQ query used as its source. I timed the query definition and it took effectively 0 seconds, because LINQ defers execution: no work is done until the results are requested. However, when I timed the rOriginalEvent.First() calls that followed, they took a total of 0.45 seconds, since each call runs the query against the database again. This does not seem to be a significant amount of time, but when repeated 1500 times for a single transaction, it meant the transaction would last almost 20 minutes.

Since LINQ to SQL generates the SQL for a query expression in the background, I looked for a way to have the record I wanted extracted directly by the query itself. I then turned to the following LINQ query:

var rOriginalEvent = (from orgEvents in db.BIP_Playlist_Events

where orgEvents.eventid.Equals(searchStr)

orderby orgEvents.Last_Updated descending

select orgEvents).FirstOrDefault();

This moves all the processing into the LINQ to SQL provider and returns either the record I wanted or null. The important thing is that I no longer needed the First() call when getting the information:

if (rOriginalEvent != null)
{
    dlstartDateTime = rOriginalEvent.dlstart;
    orgDlhnumber = rOriginalEvent.dlhnumber;
    orgEventid = rOriginalEvent.eventid;
}

By moving the costly First() call into the LINQ query itself, I reduced the time of the total operation from 0.45 seconds to 0.1 seconds, which makes it 4.5 times faster than before.

In conclusion, I recommend that if you are after a single record for a specific set of information, use the above LINQ expression. The FirstOrDefault() method returns the first element in the collection, or the default value (null for a reference type) if the collection is empty. It therefore never throws the exception that First() raises on an empty result, and you only need to check the result for null before using it.
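The cost of repeated Count() and First() calls can be observed without a database. The sketch below is an assumption-laden illustration: it uses LINQ to Objects rather than LINQ to SQL, and the PlaylistEvent class, the DeferredExecutionDemo class and the Enumerations counter are hypothetical names invented for the example. It counts how many times the source is read, which stands in for the number of round trips to the database.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a playlist event record.
public sealed class PlaylistEvent
{
    public string EventId { get; set; }
    public DateTime LastUpdated { get; set; }
}

public static class DeferredExecutionDemo
{
    // Number of times the source sequence has been read from the start.
    public static int Enumerations;

    private static IEnumerable<PlaylistEvent> Source(List<PlaylistEvent> events)
    {
        Enumerations++; // runs once per enumeration, not when the query is defined
        foreach (PlaylistEvent e in events)
        {
            yield return e;
        }
    }

    // Original style: Count() followed by two First() calls.
    public static int CountThenFirst(List<PlaylistEvent> events, string id)
    {
        Enumerations = 0;
        var query = from e in Source(events)
                    where e.EventId == id
                    orderby e.LastUpdated descending
                    select e;
        if (query.Count() > 0)
        {
            string eventId = query.First().EventId;
            DateTime updated = query.First().LastUpdated;
        }
        return Enumerations;
    }

    // Revised style: a single FirstOrDefault() and a null check.
    public static int FirstOrDefaultOnce(List<PlaylistEvent> events, string id)
    {
        Enumerations = 0;
        PlaylistEvent match = (from e in Source(events)
                               where e.EventId == id
                               orderby e.LastUpdated descending
                               select e).FirstOrDefault();
        if (match != null)
        {
            string eventId = match.EventId;
            DateTime updated = match.LastUpdated;
        }
        return Enumerations;
    }
}
```

For a list that contains a matching event, CountThenFirst reads the source three times while FirstOrDefaultOnce reads it once, which mirrors why the single-call form was faster against SQL Server.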

Labels: LINQ, performance, playlist, recon key, S4M, SQL Server

Saturday, January 10, 2009

What is Software Engineering?

Software engineering is defined as the application of engineering principles and practices to software development with the end-goal of making software development a predictable and controlled discipline.

CMMI attempts to bring this discipline to software development projects by offering suggestions for roles, responsibilities and processes for software projects.

However, the goal of this blog is not to discuss process; I'll leave that for my software engineering management blog. This blog will focus on commonly experienced software development problems and their respective solutions. The aim is to reduce the number of unknowns in software projects so that they become more predictable and controlled. In that way, this blog will contribute to the software engineering discipline.
